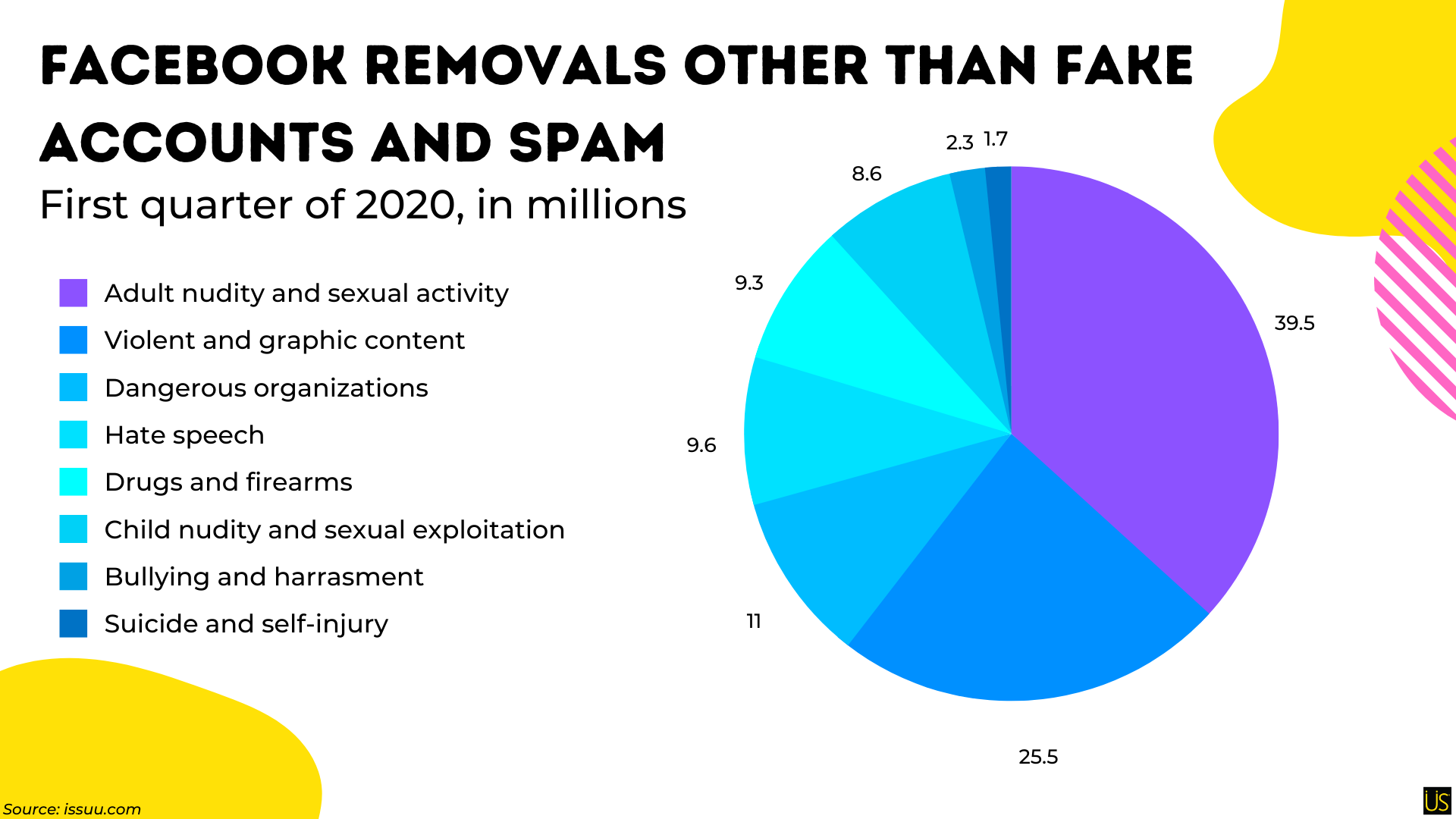

Although automated moderators take down 95% of reported content, they still fall short in detecting nudity, firearms, sexual activity, and drugs in the live streams and numerous posts they receive in a single day. Human content moderators screen posted multimedia content more thoroughly than AI can.

Human content moderators filter and review user-generated content to keep platforms safe and private. They ensure compliance and protect users and digital brands from malicious content.

Human Content Moderation

On Facebook alone, more than 3 million pieces of content are reported through artificial intelligence and user flagging. Human content moderation is a crucial core function in which a person ensures the safety of a platform.

A human can screen what AI tends to miss for any violations of the predetermined rules and guidelines of a platform.

The roles and responsibilities of a human content moderator are necessary to protect users from exposure to harmful content, which includes:

- Violence

- Sex and nudity

- Sexual exploitation

- Crimes

- Terrorism and Extremism

- Scam and Trolling

- Drug Abuse

- Spam and hate

- Other harmful and illegal content

What is the role of human content moderation?

Behind the vibrant platforms, a human content moderator’s role requires making critical calls to moderate and eliminate harmful content. This means sorting through millions of pieces of content for age restrictions, spam, language, and more to keep platforms safe.

Human content moderators work to:

- Build or maintain a company’s reputation of positivity.

- Protect users of certain ages against content for mature audiences.

- Eliminate harassment and bullying, disturbing content, and offensive content on social media platforms, blogs, posts, and forums.

- Understand client policies and ensure that users’ posts comply with the guidelines of the brand.

- Conduct brand-building activities to set a company ahead of its competitors, including writing public relations reports.

- Identify areas of improvement by aligning the brand’s vision with its social media design model.

- Communicate with the users and the team to accommodate new policies in the best interest of the company and its users.

According to a 2022 NYU Stern report, content moderators hold the platforms together. Their main responsibility is to keep platforms from descending into anarchy. Some of the report’s notable findings on major platforms include:

- Facebook removed 1.9 billion pieces of spam, from posts to comments, 1.7 billion fake accounts, and 107.5 million pieces of content showing disturbing material such as nudity, violence, and hate speech.

- YouTube removed over 2 million channels for scams, misleading content, promotion of violence, harassment and cyberbullying, nudity, and child safety violations.

- Twitter locked, suspended, and banned over 1 million accounts for hateful conduct, abuse, impersonation, violent threats, child exploitation, and leaking private information.

Human content moderators handle 800 to 1,000 pieces of multimedia content daily. The content they review includes videos, images, text, forum posts, shared links, blogs, and comments. Sometimes they have to remove the same content multiple times as users continue to upload content already marked for deletion.

The roles and responsibilities of a content moderator are subjective. They depend on who the target audience is and what the brand vision is.

What type of tasks does a human content moderator do?

Content moderation adapts to different industries, business requirements, and company demands, each with its own guidelines and policies. Below are the common types of content moderation that companies can consider for their online communities.

1. Pre-moderation: Before user-generated content reaches the active users, human moderators screen it to decide if it is appropriate for publication, should be reported, or should be blocked (user-generated content is frequently flagged by automatic systems).

High levels of content control are possible with this strategy, but it also runs the danger of causing content bottlenecks when moderation teams are unable to handle sudden increases in content.

2. Post-moderation: Content is shown to active users on the platform while it is held in a moderation queue. But this creates legal, ethical, and commercial risks because improper and even illegal content may be accessed before it is removed.

Some significant content platforms, like Facebook, have added proactive post-moderation components. This includes tracking the online habits of users and banning or blocking them if their content violates platform community standards.

3. Real-time moderation: Some streaming videos require real-time moderation. In this scenario, moderators will keep an eye on some or all of the content and take it down if problems arise.

To ensure everything is on track, moderators occasionally spot-check the content or simply watch the first few minutes. This is often seen in YouTube streaming, where a video is automatically made private when a violation occurs.

4. Reactive moderation: Reactive moderation appears on platforms that place a ‘Report this’ button next to the content users have uploaded.

Reactive moderation relies on the active viewers to identify offensive or unlawful content. But the system is often abused. A user can report any post made by another user and mark it for moderation if they want to specifically target that user.

Some users might even target your content moderators with this approach, reporting offensive stuff just to waste their time.

5. Distributed moderation: Although democratic, this strategy is rarely applied. Content is distributed to a group (including other members of the community and/or platform staff) and they are tasked with reviewing each piece of user-generated content.
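The difference between pre-moderation and post-moderation comes down to when content becomes visible relative to human review. The sketch below illustrates that difference under simplifying assumptions; the `Platform` class, its `submit` and `review` methods, and the `Mode` enum are hypothetical names used for illustration, not any platform's real API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    PRE = "pre-moderation"
    POST = "post-moderation"


@dataclass
class Platform:
    """Hypothetical platform that holds every submission for human review."""
    mode: Mode
    visible: list[str] = field(default_factory=list)       # what users can see
    review_queue: list[str] = field(default_factory=list)  # awaiting a human

    def submit(self, post: str) -> None:
        # Every submission enters the human review queue.
        self.review_queue.append(post)
        # Post-moderation publishes right away; pre-moderation waits for approval.
        if self.mode is Mode.POST:
            self.visible.append(post)

    def review(self, post: str, approved: bool) -> None:
        # A human decision either publishes the post or takes it down.
        self.review_queue.remove(post)
        if approved and post not in self.visible:
            self.visible.append(post)
        elif not approved and post in self.visible:
            self.visible.remove(post)


pre = Platform(Mode.PRE)
pre.submit("first post")    # hidden until a moderator approves it

post = Platform(Mode.POST)
post.submit("first post")   # visible immediately, still queued for review
```

In the pre-moderation case, a sudden spike in submissions only grows `review_queue`, which is the bottleneck risk described above. In the post-moderation case, anything rejected was already visible for some time before `review` removed it.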

How automated moderation helps human content moderation

When AI removes malicious content before it reaches a human content moderator, it reduces the mental toll that human moderators experience. Here are some areas in which automated moderation helps in content moderation.

Content Modification: AI-powered tools that blur images, convert them to greyscale, and mute audio can reduce a content moderator’s exposure to harmful content.

Prioritizing/Triage: AI assigns urgency and toxicity ratings to each piece of content based on previous data. Human moderators can then manually sequence the items and plan when to take their mental breaks.

Content Labeling: Text associated with a video, along with Visual Question Answering (VQA) systems that answer questions about the content, allows a moderator to make a decision without looking at the content.
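As a rough illustration of the triage idea, the sketch below orders review items by a combined urgency and toxicity score. It assumes both ratings are already available as numbers between 0 and 1; the function names `build_queue` and `next_item` and the 0.6 weighting are assumptions made for this example, not a description of any real moderation system.

```python
import heapq


def build_queue(items, urgency_weight=0.6):
    """Build a priority queue from (content_id, urgency, toxicity) tuples.

    Scores are assumed to lie in [0, 1]; the weighting is an arbitrary choice.
    """
    heap = []
    for order, (content_id, urgency, toxicity) in enumerate(items):
        score = urgency_weight * urgency + (1 - urgency_weight) * toxicity
        # heapq is a min-heap, so the score is negated to pop the highest first.
        # `order` keeps ties in submission order.
        heapq.heappush(heap, (-score, order, content_id))
    return heap


def next_item(heap):
    """Return the content_id with the highest combined score."""
    _, _, content_id = heapq.heappop(heap)
    return content_id


queue = build_queue([
    ("clip-a", 0.2, 0.1),
    ("clip-b", 0.9, 0.8),
    ("clip-c", 0.5, 0.5),
])
first = next_item(queue)  # "clip-b", the item with the highest combined score
```

A team could use the same scores the other way around, for example by interleaving high-toxicity items with milder ones so that breaks fall after the hardest material.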

What are the differences between human content moderation and automated (AI) moderation?

Automated moderation and human moderators work together to make an online platform safe. Each makes up for what the other lacks in different areas.

Why do we still need human content moderators?

Humans understand context: Facebook has built moderation algorithms that delete 99.9% of spam and 99.3% of terrorist propaganda. But these algorithms also prompted the deletion of posts against discrimination and civil rights abuse, because automated moderation relies on the language used, not on the context it was used in.

AI lacks the ability to discern context. A string of totally acceptable words can make up improper content. Cultural specifics also need to be considered, which human content moderators can do.

Automated moderators can be bypassed: Automated moderators have profanity filters to identify hate content. But users often hide profanities by replacing some letters with symbols, making it difficult for automated moderators to detect, sort, and eliminate this content.

Humans can read between the lines. They can comprehend varying negative connotations across certain cultures. They can also learn about the brand’s nature and adapt their content moderation to your business goals.
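The symbol-substitution trick is easy to demonstrate. The sketch below compares a naive word-list filter with one that first maps common look-alike characters back to letters. The blocklist word, the substitution table, and both function names are placeholders chosen for this example; real filters are considerably more elaborate.

```python
# Placeholder blocklist; a real filter would use a much larger curated list.
BLOCKLIST = {"idiot"}

# A few common look-alike substitutions (an illustrative, incomplete table).
SUBSTITUTIONS = str.maketrans({"1": "i", "0": "o", "@": "a", "$": "s", "3": "e"})


def naive_filter(text: str) -> bool:
    """Flag text only when a blocklisted word appears exactly as written."""
    return any(word in BLOCKLIST for word in text.lower().split())


def normalized_filter(text: str) -> bool:
    """Undo look-alike substitutions before checking the blocklist."""
    cleaned = text.lower().translate(SUBSTITUTIONS)
    return any(word in BLOCKLIST for word in cleaned.split())


disguised = "you 1d10t"
caught_naive = naive_filter(disguised)            # False: the disguise works
caught_normalized = normalized_filter(disguised)  # True: "1d10t" becomes "idiot"
```

Normalization narrows the gap but does not close it. New spellings, spacing tricks, and words whose meaning depends on context still get through or get flagged wrongly, which is where human judgment remains necessary.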

Humans can hold authentic conversations: Automated moderators cannot hold personalized conversations with compassion and empathy. Human moderators can answer questions, provide real-time customer support, and resolve complex issues.

Humans can track misinformation: Automated moderators often approve content that promotes disinformation, which can directly impact your consumers. Human moderators can identify inaccuracies, flag malicious and harmful fake news in any multimedia, and protect users from unwanted misinformation.

What are the challenges of human content moderation?

Facebook paid a $52 million settlement covering 11,000 moderators who suffered PTSD on the job. BBC News reported on the mental toll of the job, in which Facebook moderators process over a hundred “tickets” of images, videos, and text that can contain graphic violence, suicide, exploitation, and abuse.

Human content moderators are susceptible to the following effects:

- Post Traumatic Stress Disorder (PTSD)

- Panic Attacks

- Anxiety

- Depression

- Self-harm

- Normalization and desensitization to disturbing language

- Distortion of beliefs or believing conspiracy theories because of constant exposure

That is why human content moderators usually have larger compensation packages, flexible schedules, and periodic access to psychotherapy and psychiatric care.

The ethics of human content moderation

Human content moderators protect users and the brand’s reputation, but what protects them?

Many moderators claim that during the recruitment process, they were given purposefully imprecise job descriptions that drastically misrepresented the work. Worse, they face low wages, an emotional toll, and poor working conditions.

Some companies even label content moderation as an entry-level job. They do not disclose the quantity and categories of distressing content that human content moderators will face every working day. The ethical approach is to give an honest job description before hiring, to prepare candidates for what they’ll see, hear, and watch.

Pre-screening for mental health can eliminate applicants who have a history of mental illness or who have traits that would make them more susceptible to psychological harm. Even when an employee claims they don’t have any illness, a mental health evaluation is a process to protect them.

There should be limits on exposure to harmful content. Moderators should also have scheduled breaks to decompress after watching and reading disturbing content.

Many businesses provide access to thorough mental health treatment at work. Former moderators should still have access to mental health treatment post-employment.

How to outsource human content moderation?

There are 3.96 billion users online, and customers increasingly base their purchasing decisions on user-generated content. You can have an in-house content moderator detect and remove content that does not align with your company policies. But when the amount of content to review increases, outsourcing human content moderators is a cost-efficient solution.

What should you look for when outsourcing human content moderators?

Level of expertise: The service provider’s experience in content moderation.

- How many clients have they worked with?

- Who are those clients?

- Do they have an automated moderator to work with their human moderators?

Talent Acquisition: The service provider’s recruitment and employee care.

- Do they pre-screen human moderators for psychological vulnerability before exposing them to disturbing materials?

- Do they have their own team of human content moderators or do they hire underpaid and untrained freelancers?

Employee Protection: The service provider’s care for its employees’ mental wellbeing.

- Are the employees’ working conditions safe and secure?

- Does the company prioritize the health and wellbeing of its human moderators?

- What is the service provider’s attrition rate?