Whether you’re a small business owner or the Digital Strategy Manager for a multinational company, your website needs to stand out from the 1.13 billion websites on the internet. A site audit is the first step in formulating an effective SEO (Search Engine Optimisation) strategy.

It highlights the parts of your website with issues to address, challenges to overcome, and opportunities to improve. But what is an SEO audit, who should do it, and why is it so important?

What’s an SEO Site Audit?

An SEO site audit is a top-to-bottom review of a site that points out on-page issues and presents recommendations for fixing them and for improving what is already in place.

So who should do it?

Ideally, it should be an SEO specialist. You can do it yourself, but do you fix your own plumbing and electrics, or service your own car? I hope not. It’s best left to the professionals.

If your business is located in Australia, the United States, or Canada, it doesn’t mean the audit has to be completed by someone in the same location. There is that wonderful technology called the internet.

Outsourcing to a remote digital team in the Philippines, like USource, is straightforward and efficient, and it offers a significant cost saving compared with employing an in-house SEO or hiring a locally based digital agency.

Why should you optimize for search?

Maybe we should start with what happens if you haven’t optimized for search — little or no search traffic, lost leads, lost customers to competitors, lost revenue.

Search is one of the most common activities done on the internet, second only to reading and sending emails. Whether it’s putting together your next Outfit of the Day (OOTD), researching a new hobby, learning “how-to” fix a leaky tap, or finding an answer to a problem, people turn to search engines — Google, Bing, Yahoo, or even Ask.com to find out more about something or someone.

And given the sheer volume of searches being done every second of every day (Google takes in 89.3 billion searches per month as of 2022), SEO presents an opportunity for businesses to rake in some serious traffic and other long-term benefits at a lower cost than Pay-Per-Click (PPC) advertising such as AdWords (now Google Ads). That’s not to say that search ads should be ignored. In fact, PPC ads are critical to success in search and can deliver strong results when used in tandem with a carefully planned SEO strategy.

But before you can reap the benefits of SEO, a site audit is necessary. You will get to see under the bonnet of the car, so to speak. You will see what’s working and what needs to be changed, or fixed, by your SEO specialist to improve website visibility in the search engine results.

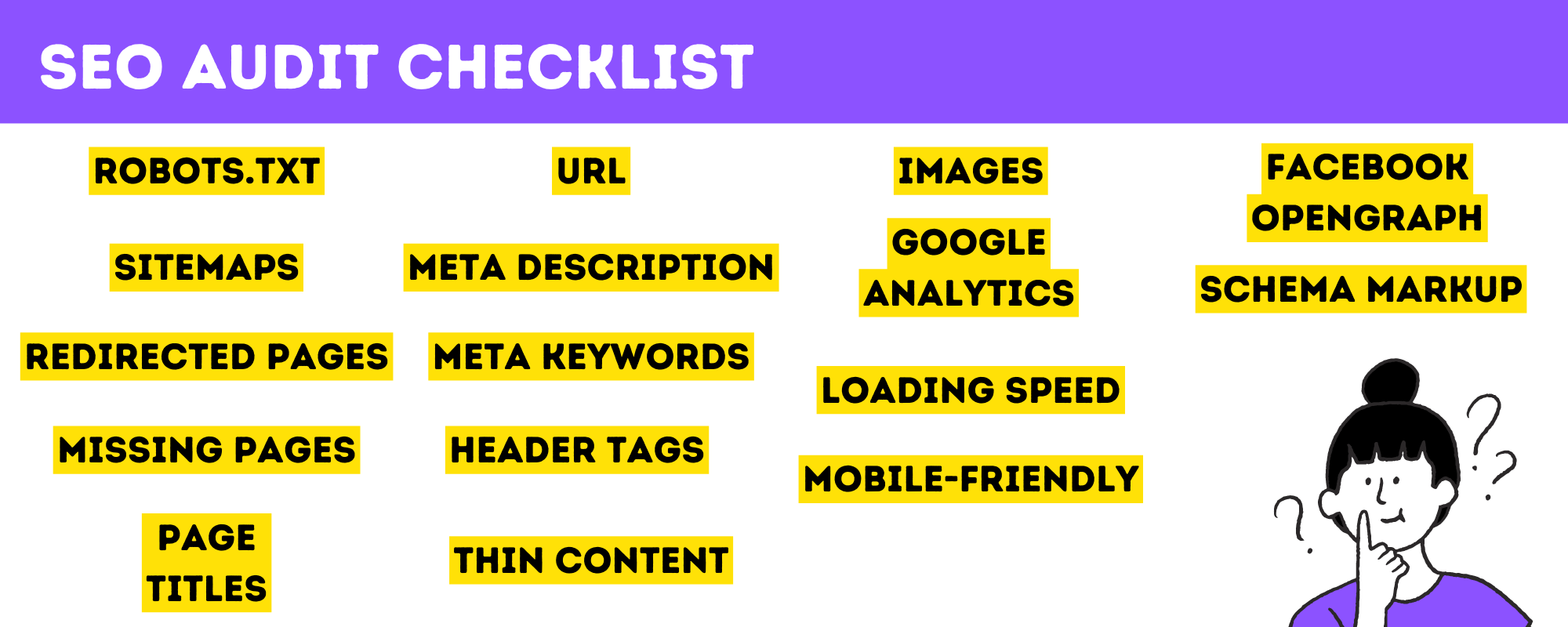

Key Components of a Site Audit

A site audit starts with your site’s basic information, such as its domain name, IP address, and server location, as well as the number of pages from your site that Google has already indexed. There should also be a chart listing the items checked and indicating whether each one has issues. The pages that follow should each give detailed information about a specific aspect of your website and ways to improve it.

What are the benefits of an SEO Audit?

If you’ve never performed an audit on your website, you’re missing out on a lot of chances to optimize and boost its functionality. And you might also miss out on possibilities to move up in search engine results.

When you perform an SEO audit, your website is crawled to find various metrics and potential problems. This offers your SEO strategy a number of advantages.

It shows you any crawling and indexing issues

A beautiful website is virtually useless if the search engine bots can’t crawl or index it. If your robots.txt file is blocking important pages, those pages practically don’t exist as far as the search engines are concerned. This is similar to locking the front doors of your shop, but expecting customers to walk inside. It’s not going to happen.

It points out missing data and opportunities for pages to rank better

Pages that have content but poorly optimized title tags or meta descriptions present an opportunity to target keywords. For example, an article that’s meant to rank for “SEO Audit” will have a hard time in the search results if the keyword doesn’t appear in the Title, URL, Meta Description, and content.
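As a rough illustration of that check, here is a minimal Python sketch that reports where a target keyword appears on a page. The function name, the example page, and the plain substring matching are all assumptions made for illustration; real audit tools use more forgiving matching.

```python
def keyword_placement(keyword, title, url, meta_description, body):
    """Report where a target keyword appears on a page (case-insensitive)."""
    needle = keyword.lower()
    # URLs usually use hyphens instead of spaces, so compare against a slug form.
    slug = needle.replace(" ", "-")
    return {
        "title": needle in title.lower(),
        "url": slug in url.lower(),
        "meta_description": needle in meta_description.lower(),
        "content": needle in body.lower(),
    }


# Hypothetical page data, invented for this example.
report = keyword_placement(
    "SEO Audit",
    title="What Is an SEO Audit and Why Does It Matter?",
    url="https://example.com/blog/seo-audit-guide",
    meta_description="Learn how a site review uncovers ranking issues.",
    body="An SEO audit is a top-to-bottom review of a site.",
)
missing = [place for place, found in report.items() if not found]
print(missing)  # ['meta_description']
```

Here the meta description is the gap, so that is where the keyword could be worked in.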

It shows technical errors

Technical issues can prevent a site from ranking well and give visitors a poor user experience.

Some errors include the following:

- Images without alt text

- Missing or duplicate Title tags or Meta Descriptions

- Title tags that are too short

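- And the worst offense of all: Duplicate content. Google may filter duplicate pages out of its results, and it can penalize you if the duplication looks like an attempt to manipulate rankings.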
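Some of the errors listed above can be spotted with a few lines of Python. The sketch below uses the standard library’s `html.parser` to flag a missing or short title, a missing meta description, and images without alt text. The class name, the sample page, and the 30-character “too short” threshold are assumptions for illustration. Duplicate titles and duplicate content are not covered, since finding them means comparing pages against each other.

```python
from html.parser import HTMLParser

MIN_TITLE_LENGTH = 30  # assumed threshold for "too short"; not from the article


class TechnicalIssueScanner(HTMLParser):
    """Collects the title text, the meta description, and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Missing and empty alt are both flagged; decorative images may
            # legitimately use alt="", so treat these as items to review.
            self.images_missing_alt.append(attrs.get("src") or "(no src)")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_issues(html):
    scanner = TechnicalIssueScanner()
    scanner.feed(html)
    issues = []
    title = scanner.title.strip()
    if not title:
        issues.append("missing title tag")
    elif len(title) < MIN_TITLE_LENGTH:
        issues.append("title tag too short")
    if not scanner.meta_description:
        issues.append("missing meta description")
    for src in scanner.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues


# Hypothetical page, invented for this example.
sample = """<html><head><title>Home</title></head>
<body><img src="logo.png"><img src="team.jpg" alt="Our team"></body></html>"""
print(find_issues(sample))
# ['title tag too short', 'missing meta description', 'image without alt text: logo.png']
```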

It points out potential penalties before they happen

Certain practices like “keyword stuffing” and “keyword cloaking” are considered “Black Hat” SEO tactics that should never be used. If Google catches you employing these shady methods, prepare to get a dose of mob justice from the algorithms aimed at weeding out websites and pages that breach their webmaster guidelines.

Penalties can range from lowered ranks to having your entire site removed from the search results. And Google has been known to show no mercy even to big companies when it comes to enforcing its rules. BMW got called out by Matt Cutts himself and had its PageRank reduced to zero back in 2006 for using doorway pages, and J.C. Penney was ratted out by The New York Times in a full-blown investigative piece in 2011.

An audit can point out issues on your site that Google might interpret as “Black Hat”, sparing your SEO specialist the trouble of having to submit a reconsideration request and sparing you a sudden loss of traffic to your website.

Your SEO Audit should have detailed information on the following:

Robots.txt

It’s a plain-text file at the root of your site containing instructions for search engine bots. It should list the pages that you don’t want crawled, like the backend. However, it should not block important pages or pages you want to show up in search results. Because anyone can open this file, don’t rely on it alone to protect pages that might have personal or sensitive information.

Also, a link to your XML Sitemap should be included in this file so that your site can be crawled more quickly.
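To make this concrete, the sketch below parses a small robots.txt with Python’s standard `urllib.robotparser` and checks which pages a bot may crawl. The file contents, paths, and domain are hypothetical, and `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths and domain are assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /checkout/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

pages_to_check = [
    "https://example.com/",
    "https://example.com/services/seo-audit",
    "https://example.com/wp-admin/options.php",
]
# Pages that the rules above stop Googlebot from crawling.
blocked = [url for url in pages_to_check if not parser.can_fetch("Googlebot", url)]
print(blocked)             # ['https://example.com/wp-admin/options.php']
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```

If an important page ever shows up in `blocked`, that is the locked front door an audit is meant to catch.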

Sitemaps

There are two kinds of sitemaps: XML and HTML. The link to your XML sitemap should be included in your Robots.txt file so that the bots can crawl your site more easily.

If the XML Sitemap is for bots, then the HTML sitemap is meant for users. A link to it is usually placed in the footer, and the page itself is a list of links pointing to parts of your website.
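The relationship between the two can be sketched in Python: read the URLs out of an XML sitemap, then render the same URLs as a simple HTML list for visitors. The sitemap content and function names below are hypothetical, and a real HTML sitemap would use readable page names as link text rather than raw URLs.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical sitemap content; a real audit would download the actual file.
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/services/</loc></url>
  <url><loc>https://example.com/contact/</loc></url>
</urlset>
"""

# Namespace used by the sitemaps.org format.
NAMESPACE = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in an XML sitemap."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NAMESPACE)]


def html_sitemap(urls):
    """Render the same URLs as a plain HTML list meant for human visitors."""
    items = "\n".join(
        f'  <li><a href="{escape(url, quote=True)}">{escape(url)}</a></li>'
        for url in urls
    )
    return f"<ul>\n{items}\n</ul>"


urls = sitemap_urls(SITEMAP_XML)
print(urls)
print(html_sitemap(urls))
```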

Redirected Pages

A redirection is a technique where entering a certain URL in your browser will take you to another page. There are two commonly used redirects: the 301 (Permanent) and the 302 (Temporary) redirects.

A common scenario where a 301 redirect is used is when a page with important content is deleted. A 301 can be put in place so that anybody who types in the old URL, clicks on a link to it, or opens it from their browser’s bookmarks will be taken to the new URL instead of being greeted with a 404.

A 302 redirect, which is temporary, can be used if a page is under maintenance but will go live again eventually.

Missing Pages

These are pages that have been rendered inaccessible to both search engines and users. The two most common errors are the 404 (Page not found) and 403 (Forbidden) errors.

A 404 happens if the page you’re trying to access has been deleted or never existed. These pages don’t do well in search results, as Google does not want to lead its users to a dead end. The usual solution is implementing a 301 redirect to a relevant page. In addition, creating a custom-designed 404 page with hyperlinks to important pages on your website will help with the overall Customer Experience (CX).

Some sites even use this as an opportunity to further promote their brand. You can play “Space Invaders” on Kualo’s 404 page, and a Stephen Hawking-esque voice will greet you when you run into a 404 on Waark. A 404 literally breaks Blizzard Entertainment’s site, which even pokes fun at you by saying “Grats. You broke it.”

A 403 happens when you’ve stumbled onto a page you’re not supposed to access and the server is stopping you from entering the page. This can happen for all sorts of reasons and how it can be resolved will depend on how the error came about.
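An audit report typically groups pages by the status code the server returned. The Python sketch below turns a list of crawl results into notes for the four codes discussed in the Redirected Pages and Missing Pages sections. The URLs, codes, and advice strings are made up for illustration, and the crawling itself is assumed to have happened elsewhere.

```python
from http import HTTPStatus

# Crawl results as (URL, status code) pairs; values invented for illustration.
crawl_results = [
    ("/old-pricing", 301),
    ("/summer-sale", 302),
    ("/blog/deleted-post", 404),
    ("/admin", 403),
    ("/", 200),
]

# Follow-up notes for the status codes covered in this article.
ADVICE = {
    301: "permanent redirect; confirm the target is the best replacement",
    302: "temporary redirect; confirm the page really is coming back",
    404: "missing page; add a 301 to a relevant page or restore it",
    403: "access denied; check whether this page should be public",
}


def audit_status_codes(results):
    """Return one human-readable finding per URL that needs attention."""
    findings = []
    for url, code in results:
        advice = ADVICE.get(code)
        if advice:
            findings.append(f"{url}: {code} {HTTPStatus(code).phrase} - {advice}")
    return findings


findings = audit_status_codes(crawl_results)
for finding in findings:
    print(finding)
```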

Page Titles

These are the “Big, blue links” in search results, and they also appear on the browser tab the page has been opened in. Any words in the title tag that match the keywords a user enters will be highlighted in bold, which acts as an indication of the page’s potential relevance to the keyword.

Ideally, a page’s title should describe the content of the page and include the keywords you want the page to rank for and the name of your site. It should also be no longer than about 70 characters for desktop and 475 pixels wide for mobile. If it’s too long, Google will not display it in full; if it’s too short, you might be missing an opportunity to rank for the right keywords. Also, no two pages should have the same title, to avoid confusing the search bots and a potential penalty from Google.
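Both the length check and the duplicate check are easy to automate once you have every page’s title. This is a minimal Python sketch using the 70-character desktop figure quoted above; pixel width is not measured, and the page titles are hypothetical.

```python
from collections import Counter

MAX_TITLE_CHARS = 70  # desktop figure quoted above; pixel width is not measured


def check_titles(titles_by_url):
    """Flag titles that are too long or shared by more than one page."""
    counts = Counter(title.strip().lower() for title in titles_by_url.values())
    problems = {}
    for url, title in titles_by_url.items():
        notes = []
        if len(title.strip()) > MAX_TITLE_CHARS:
            notes.append("too long")
        if counts[title.strip().lower()] > 1:
            notes.append("duplicate")
        if notes:
            problems[url] = notes
    return problems


# Hypothetical titles, invented for this example.
titles = {
    "/": "Example Co | Remote Digital Teams",
    "/services": "Services | Example Co",
    "/about": "Services | Example Co",
    "/blog/seo-audit": (
        "What Is an SEO Site Audit, Who Should Do It, "
        "and Why Is It So Important for Your Business?"
    ),
}
problems = check_titles(titles)
print(problems)
# {'/services': ['duplicate'], '/about': ['duplicate'], '/blog/seo-audit': ['too long']}
```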

URL

This is the unique address of your page. It is displayed under the title in green text in the search results and also appears in your browser’s address bar. A URL should be concise, relevant, and descriptive. Invalid characters, URL parameters, uppercase letters, spaces, and other unnecessary characters should be avoided. And finally, it should be kept short so that it is easy to share on social media.
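Those URL rules translate into a handful of simple checks. The Python sketch below uses the standard `urllib.parse` module. The 100-character cutoff for “too long” is an assumption, since the article gives no number, and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit

MAX_URL_LENGTH = 100  # assumed cutoff for "too long"; the article gives no number


def url_issues(url):
    """Return a list of style problems found in a single URL."""
    parts = urlsplit(url)
    issues = []
    if parts.query:
        issues.append("has URL parameters")
    if any(ch.isupper() for ch in parts.path):
        issues.append("uppercase letters in path")
    if " " in url or "%20" in url:
        issues.append("contains spaces")
    if len(url) > MAX_URL_LENGTH:
        issues.append("too long")
    return issues


print(url_issues("https://example.com/Blog/Post?id=42"))
# ['has URL parameters', 'uppercase letters in path']
print(url_issues("https://example.com/blog/seo-audit-guide"))
# []
```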

Meta Description

This snippet of text sits underneath the URL and can be used to further describe the page and the content inside. The meta description should not exceed 156 characters for desktop or 757 pixels wide for mobile; otherwise, Google will truncate it. Also, no two pages should have the same meta description.

Like the page title, Google will highlight text in the meta description if it matches the keyword(s) entered.
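To get a feel for what truncation does to a description, the Python sketch below cuts text at the last full word that fits within the 156-character figure quoted above. This is only an approximation: Google’s actual cut-off depends on pixel width and is not reproduced here, and the function name is an assumption.

```python
MAX_DESCRIPTION_CHARS = 156  # desktop figure quoted above


def preview_description(description, limit=MAX_DESCRIPTION_CHARS):
    """Approximate how an over-long meta description might be cut off."""
    text = " ".join(description.split())  # collapse stray whitespace
    if len(text) <= limit:
        return text
    # Keep only whole words that fit within the limit, then mark the cut.
    cut = text[:limit].rsplit(" ", 1)[0]
    return cut + "..."


short = "A site audit is the first step in an effective SEO strategy."
long_text = "word " * 50  # 249 characters once whitespace is collapsed

print(preview_description(short))
print(preview_description(long_text))
```

Running the preview over every page makes it easy to see which descriptions lose their key message when cut.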

Meta Keywords

Back in the day, meta keywords were used to make it easier for search engines to decipher what a page is about. But as the technology behind search engines has become more sophisticated, they are now able to understand the content of a page more easily, without the need for meta keywords. Meta keywords should be avoided, as they only expose your targeted keywords to the competition.

Header Tags

Header tags act like the headlines and subheadings of a newspaper. They come in levels from H1 to H6, with H1 being the highest. You should have only one H1 tag at the top of your page, and it should contain your most important keyword(s). Having more than one of the other header tags on your page is acceptable as long as they are used to structure your content.
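Counting H1 tags is a quick automated check. The Python sketch below builds an outline of a page’s headings with the standard `html.parser` module and counts the H1s. The class name and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records each heading on a page as a (level, text) pair."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        # Matches h1 to h6 only; other two-letter tags such as <hr> are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.outline.append((self._level, "".join(self._text).strip()))
            self._level = None


def heading_report(html):
    """Return the heading outline and the number of H1 tags."""
    parser = HeadingOutline()
    parser.feed(html)
    h1_count = sum(1 for level, _ in parser.outline if level == 1)
    return parser.outline, h1_count


# Hypothetical markup with two H1 tags.
page = "<h1>SEO Site Audits</h1><h2>Robots.txt</h2><h2>Sitemaps</h2><h1>Contact</h1>"
outline, h1_count = heading_report(page)
print(outline)
print(h1_count)  # 2, so this page would be flagged
```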

Thin Content

If your page has too little content, it will hold little to no value for both the search engine and your visitors. This can be remedied by your copywriter.

Ideally, your pages should have at least 300 words (as an example, this article has about 2,000 words).
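A word count like this can be approximated in Python by stripping the markup and counting what is left. The sketch below skips script and style blocks and uses the 300-word figure from above. The class and function names are assumptions, and the count is approximate (for example, “don’t” counts as two words).

```python
import re
from html.parser import HTMLParser

MIN_WORDS = 300  # minimum quoted above


class TextExtractor(HTMLParser):
    """Collects visible text, ignoring the contents of script and style tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def word_count(html):
    extractor = TextExtractor()
    extractor.feed(html)
    return len(re.findall(r"\w+", " ".join(extractor.chunks)))


def is_thin(html, minimum=MIN_WORDS):
    return word_count(html) < minimum


# Hypothetical page with 120 words of body text.
page = "<p>" + "word " * 120 + "</p><script>var x = 1;</script>"
print(word_count(page), is_thin(page))  # 120 True
```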

Images

Adding alt text helps visitors to your site understand what an image is if it fails to load. Search engines also use it to understand what the image is about, since they cannot read an image the same way humans do, and it can help your images rank in image search.

Also, images with large file sizes can affect your site’s loading speed and should be compressed and resized.

Google Analytics

Setting up a Google Analytics account can help keep track of all sorts of SEO-related data such as traffic from organic search results, time on site, bounce rate, and many more.

Google Analytics is presently used by roughly 28.1 million websites, and it is anticipated that this figure will increase over the coming years. That’s because it ranks first among the best online analytics tools.

It can also be used to obtain data from other inbound traffic channels, such as ads, and it allows you to track conversions so you can see where your potential customers stop short of the finish line.

Loading Speed

Page load time is influenced by various factors, such as page size, server speed, and the user’s internet connection. Ideally, a page should load in under 2 seconds, with mobile devices aiming for less than 1 second.

You can measure page load speed using tools like Google Analytics or Pingdom, browser extensions, or manually with a stopwatch.

To optimize load speed, compress and choose appropriate file formats for images, minimize plugins and third-party scripts, enable browser caching, and utilize a content delivery network (CDN) for global content distribution and reduced data travel distance.
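For a scripted take on the stopwatch approach, the Python sketch below times how long a fetch takes and reports the median of a few runs. It is a rough proxy only: `fetch_html` downloads the HTML document and nothing else, so images, stylesheets, and scripts are not included the way they would be in a browser. The function names are assumptions.

```python
import time
from urllib.request import urlopen

TARGET_SECONDS = 2.0  # desktop target quoted above


def time_fetch(fetch, url, runs=3):
    """Return the median wall-clock time, in seconds, of calling fetch(url)."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch(url)
        samples.append(time.perf_counter() - start)
    samples.sort()
    return samples[len(samples) // 2]


def fetch_html(url):
    """Download only the HTML document, not the images, CSS, or scripts."""
    with urlopen(url, timeout=10) as response:
        return response.read()


def meets_target(seconds, target=TARGET_SECONDS):
    return seconds < target


# Example use (needs network access, so it is left commented out):
# elapsed = time_fetch(fetch_html, "https://example.com/")
# print(f"{elapsed:.2f}s", "OK" if meets_target(elapsed) else "too slow")
```

Taking the median of several runs smooths out one-off network hiccups that a single measurement would exaggerate.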

Mobile-friendly

Mobile search has surpassed search on desktops and laptops, and the gap continues to grow every year. In a bid to make the web better for mobile devices, Google introduced mobile-first indexing and has urged website owners to make their sites mobile-friendly by moving from m-dot URLs to responsive design.

With mobile-first indexing, Google uses the mobile version of a website for indexing and ranking. The process began in May 2016, and by March 2020, 70% of websites had moved to mobile-first indexing.

Due to the pandemic, Google postponed the deadline for all sites to make the switch to mobile-first indexing from September 2020 to March 2021.

With this, your site should be able to adjust to the size of the screen, have buttons that are large enough to be tapped on a touchscreen, keep the header at the top of the screen when you scroll down, and have menus that users can open and close.

Facebook Open Graph

Open Graph turns a link to your website into a rich snippet with customizable metadata when you post it on Facebook. It’s highly recommended, especially if you use Facebook to engage and interact with your users.

Facebook still displays relevant content published in groups, pages you follow, or by friends even if the search yields no results. It also displays well-liked items that Facebook users have already shared.

Open Graph tags are the source of each headline and image.

Open Graph tags also help create a snippet when someone sends you a link through direct messaging on an app that supports the Open Graph protocol, such as iMessage, Slack, and of course Facebook’s Messenger and WhatsApp.
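Open Graph tags are ordinary meta tags in a page’s head. The Python sketch below generates the four basic properties defined by the Open Graph protocol (title, type, URL, and image) plus the optional description. The function name and the page details are hypothetical.

```python
from html import escape


def open_graph_tags(title, description, url, image):
    """Build Open Graph meta tags for a page's <head> section."""
    properties = {
        "og:title": title,
        "og:type": "website",
        "og:description": description,
        "og:url": url,
        "og:image": image,
    }
    return "\n".join(
        f'<meta property="{name}" content="{escape(value, quote=True)}" />'
        for name, value in properties.items()
    )


# Hypothetical page details, invented for this example.
tags = open_graph_tags(
    title="SEO Site Audits & Why They Matter",
    description="What an SEO audit covers and who should do it.",
    url="https://example.com/blog/seo-audit",
    image="https://example.com/images/seo-audit.png",
)
print(tags)
```

Escaping the values keeps characters such as `&` and quotation marks from breaking the generated HTML.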

And speaking of rich snippets…

Schema Markup

Have you ever seen unique rich snippets when you search for certain things on Google? Or even just a simple 1-5 star rating on a product or the author of an article?

That was done using Schema. It’s a collection of microdata that you can add to your site’s HTML file so that it can show up on Google’s search results as a rich snippet.

The actual data is known as structured data, and schema is a language used to represent it.

Your data should be structured to improve communication with search engines.

Google gives users better results when it has a deeper understanding of entities.

Once Google has gathered this structured data, features like rich cards, rich snippets, and the knowledge panel can appear on SERPs.
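As an illustration of what structured data looks like, the Python sketch below builds a schema.org Article description and wraps it in a script tag. Note that it uses JSON-LD, a different syntax from the microdata mentioned above that expresses the same schema.org vocabulary. The function name, headline, author, and date are all hypothetical.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Return a JSON-LD script tag describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical article details, invented for this example.
snippet = article_json_ld("What Is an SEO Site Audit?", "Jane Doe", "2023-01-15")
print(snippet)
```

The resulting tag goes in the page’s HTML, where search engines can read the author and publication date without having to guess them from the visible text.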

Start with the first step

There is a Chinese proverb that says, “A journey of a thousand miles begins with a single step”.

At first glance, implementing SEO can be a tall order. Google has about 200 ranking factors, most of them unknown. Knowing where to start, what’s most important, and what will have the biggest impact in delivering relevant, meaningful traffic that converts can be daunting.

An SEO Audit is not only the “first step”, it’s a guide to taking the right steps.